Jie's work on placing new flash next to GPU cores has been accepted by DAC'19

02.05.2019

We propose FlashGPU, a new GPU architecture that tightly blends new flash (Z-NAND) with massive GPU cores. Specifically, we replace global memory with Z-NAND, which exhibits ultra-low latency. We also architect a flash core to manage request dispatches and address translations underneath the L2 cache banks of the GPU cores. While Z-NAND is a hundred times faster than conventional 3D-stacked flash, its latency is still longer than that of DRAM. To address this shortcoming, we propose a dynamic page-placement and buffer manager for Z-NAND subsystems that is aware of the bulk and parallel memory access characteristics of GPU applications, thereby offering high throughput and low energy consumption. Congratulations, Jie!

Our low-latency phase change memory architecture proposal has been accepted by DAC'19

02.05.2019

PCM is a promising non-volatile memory technology, as it can offer a unique trade-off between density and latency compared with DRAM and flash memory. Although PCM is much faster than flash memory, it is still notably slower than DRAM, which can significantly degrade system performance. In this paper, we analyze a PCM implementation and its engineering sample in depth, and identify the primary cause of PCM’s long latency: a long interconnect path (with high resistance/capacitance) between a cell and a sense-amp/write-driver. This in turn requires (1) a very large charge pump that accounts for ~20% of PCM chip space, ~50% of the latency of write operations, and ~2× more power than a write operation itself; and (2) a large current sense-amp that needs a long time to pre-charge the interconnect path. We then propose Low-Latency PCM (LL-PCM). Our analysis shows that LL-PCM can give 119% higher performance and consume 43% lower memory energy than PCM for memory-intensive applications. LL-PCM is only ~1% larger than PCM, as the cost of reducing the resistance/capacitance of the interconnect path is negated by its 4.1× smaller charge pump.

Sungjoon's work (erasure codes for all-flash arrays) has been accepted by TPDS

11.13.2018

Large-scale systems with all-flash arrays have become increasingly common in many computing segments. To make such systems resilient, we can adopt erasure coding such as Reed-Solomon (RS) code as an alternative to replication, because erasure coding incurs a significantly lower storage overhead than replication. To understand the impact of erasure coding on system performance and on other system aspects such as CPU utilization and network traffic, we build a storage cluster that consists of approximately 100 processor cores and more than 50 high-performance solid-state drives (SSDs), and evaluate the cluster with Ceph, a popular open-source distributed parallel file system. Specifically, we analyze the behaviors of a system adopting erasure coding from the following five viewpoints, and compare them with those of another system using replication: (1) storage system I/O performance; (2) computing and software overheads; (3) I/O amplification; (4) network traffic among storage nodes; and (5) impact of physical data layout on performance of RS-coded SSD arrays. For all these analyses, we examine two representative RS configurations, used by Google file systems, and compare them with triple replication employed by a typical parallel file system as a default fault tolerance mechanism. Lastly, we collect 96 block-level traces from the cluster and release them to the public domain for the use of other researchers.
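
The storage-overhead argument can be made concrete with a little arithmetic. The sketch below is a minimal illustration, not the evaluation setup: it assumes an RS(k, m) code stores k data chunks plus m parity chunks, and the two configurations shown, RS(6, 3) and RS(10, 4), are assumed examples rather than values stated above.

```python
from fractions import Fraction


def rs_overhead(k: int, m: int) -> Fraction:
    """Raw capacity consumed per byte of user data under RS(k, m)."""
    if k <= 0 or m < 0:
        raise ValueError("need k > 0 data chunks and m >= 0 parity chunks")
    return Fraction(k + m, k)


def replication_overhead(copies: int) -> Fraction:
    """Raw capacity consumed per byte of user data under n-way replication."""
    if copies <= 0:
        raise ValueError("need at least one copy")
    return Fraction(copies)


# Assumed example configurations (hypothetical, for illustration only).
for label, overhead in [
    ("RS(6, 3)", rs_overhead(6, 3)),
    ("RS(10, 4)", rs_overhead(10, 4)),
    ("3-way replication", replication_overhead(3)),
]:
    print(f"{label}: {float(overhead):.2f}x raw capacity")
```

Under these assumptions, both RS configurations need at most half the raw capacity of triple replication; the CPU, I/O amplification, and network costs examined in the paper are what that saving is traded against.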

Jie's GPU research fusing new memory has been accepted by HPCA 2019

11.07.2018

Congratulations, Jie! In this work, we propose FUSE, a novel GPU cache system that integrates spin-transfer torque magnetic random-access memory (STT-MRAM) into the on-chip L1D cache. FUSE can minimize the number of outgoing memory accesses over the interconnection network of the GPU's multiprocessors, which in turn can considerably improve the levels of massive computing parallelism in GPUs and therefore allow high performance. Specifically, FUSE predicts a read-level of GPU memory accesses by extracting GPU runtime information and places write-once-read-multiple (WORM) data blocks into the STT-MRAM, while accommodating write-multiple data blocks in a small portion of SRAM in the L1D cache. To further reduce the off-chip memory accesses, FUSE also allows WORM data blocks to be allocated anywhere in the STT-MRAM by approximating the associativity with a limited number of tag comparators and I/O peripherals. The paper acceptance rate of HPCA this year is 19%.

Our collaborative 3D NAND work has been accepted by ASPLOS 2019

11.07.2018

NAND-based solid-state disks (SSDs) are known for their superior random read/write performance due to the high degrees of multi-chip parallelism they exhibit. Currently, as the chip density increases dramatically, fewer 3D NAND chips are needed to build an SSD compared to the previous generation chips. As a result, SSDs can be made more compact. However, this decrease in the number of chips also results in reduced overall throughput, and prevents high-density 3D NAND SSDs from being widely adopted. We analyzed 600 storage workloads, and our analysis revealed that small read operations suffer significant performance degradation due to reduced chip-level parallelism in newer 3D NAND SSDs. The main question is whether some of the inter-chip parallelism lost in these new SSDs (due to the reduced chip count) can be won back by enhancing intra-chip parallelism. Motivated by this question, we propose a novel SOML (Single-Operation-Multiple-Location) read operation, which can perform several small intra-chip read operations to different locations simultaneously, so that multiple requests can be serviced in parallel, thereby mitigating the parallelism-related bottlenecks. A corresponding SOML read scheduling algorithm is also proposed to fully utilize the SOML read. Our experimental results with various storage workloads indicate that the SOML read-based SSD with 8 chips can outperform the baseline SSD with 16 chips. The paper acceptance rate of ASPLOS this year is 21%.

Miryeong wins at Semiconductor Open Innovation Competition (Award of Excellence)!!

10.22.2018

Miryeong received the award of excellence from the Semiconductor Open Innovation Competition (SK). Her proposal (with Myoungsoo Jung), entitled “Introducing a Hybrid Non-Volatile Memory Systems for High Performance GPGPU-based Graph Processing”, proposes a GPU architecture that replaces DRAM with PRAM. As PRAM has many practical disadvantages, the proposal also changes the graph processing algorithms and several applications to make them aware of the internal GPU architecture and the PRAM microarchitecture. Specifically, the penalties of PRAM accesses are removed from the user's perspective, and all unnecessary data movement due to application persistency management is eliminated. She and her advisor won the competition and received $30,000 for the award of excellence.

Joonhyuck wins at Semiconductor Open Innovation Competition (Award of Excellence)!!

10.22.2018

Joonhyuck received the award of excellence from the Semiconductor Open Innovation Competition (SK). His proposal (with Myoungsoo Jung), entitled “RNN-based Machine Learning Methods in an SSD for Efficient Data Reduction and Compression”, addresses the future problem of internal DRAM usage when the target SSDs employ 3D NAND and enter the terabyte-scale storage development area. His proposal integrates a simple RNN and performs machine learning to figure out the optimal operational time. His proposal also includes a technique to compress and decompress the internal data of the underlying 3D NAND flash memory. He and his advisor won the competition and received $30,000 for the award of excellence.

Sungjoon wins at Semiconductor Open Innovation Competition (Special Award)!!

10.22.2018

Sungjoon received a special award from the Semiconductor Open Innovation Competition (SK). His proposal (with Myoungsoo Jung), entitled “Holistic Optimizations for High-Reliable QLC-based SSD Storage Systems by Leveraging Redundant Fault-Tolerance Mechanisms in Data Center”, removes some redundant error correction or tolerance mechanisms from datacenter storage, thereby improving the overall bandwidth of QLC-related devices. While his proposal improves the performance of the QLC-based SSD, it still maintains the same level of reliability for the underlying QLC flash memory and storage system as that of the conventional device. Sungjoon and Myoungsoo won the competition and received $5,000 for the special award.

Our collaborative work has been accepted by SIGMETRICS 2019

10.09.2018

In this work, we present a software-only approach to reducing data movement costs in both single-threaded and multi-threaded applications. Our approach, referred to as Computing with Near Data (CND), is built upon a concept called "recomputation", in which a costly data access is replaced by a few less costly data accesses plus some extra computation, if the cumulative cost of the latter is less than that of the former. If implemented carefully, CND can successfully trade off data access with computation, and considering the continuously increasing latency gap between the two, doing so can significantly reduce the execution latencies of both sequential and parallel application programs. In this paper, we i) quantify the intrinsic recomputability of a set of single-threaded and multi-threaded applications; ii) propose a practical, compiler-driven approach that automatically transforms a given application code fragment to a version that employs recomputation; iii) discuss an optimization strategy that increases recomputability; and iv) compare CND, both qualitatively and quantitatively, against NDC. The paper acceptance rate of SIGMETRICS this summer is 8%.
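
The recomputation condition described above can be written as a one-line cost comparison. The following is a minimal sketch of that rule only, not the paper's compiler pass; the function name, the cost units (cycles), and the example numbers are all hypothetical.

```python
def should_recompute(costly_access, cheaper_accesses, extra_compute):
    """Return True when recomputation is cheaper than the original access.

    costly_access    -- cost of the data access we would like to avoid
    cheaper_accesses -- costs of the (nearer) accesses needed to rebuild the value
    extra_compute    -- cost of the extra computation that rebuilds the value
    All costs are in the same (hypothetical) unit, e.g. cycles.
    """
    return sum(cheaper_accesses) + extra_compute < costly_access


# Hypothetical numbers: a remote access of 200 cycles versus two nearby
# accesses of 4 cycles each plus 10 cycles of arithmetic.
print(should_recompute(200, [4, 4], 10))

# When the original access is already cheap, recomputation does not pay off.
print(should_recompute(20, [10, 10], 5))
```

The rule is deliberately strict (`<` rather than `<=`), matching the wording that the cumulative cost must be less than that of the original access.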

Jie, Miryeong, Donghyun and Changlim's Linux-kernel optimization and design work has been accepted by OSDI 2018

07.21.2018

Congratulations, Jie and Miryeong! This is an immense achievement! In this paper, we propose FlashShare to help ultra-low latency (ULL) SSDs satisfy the different I/O service latency requirements of different co-running applications. Specifically, FlashShare is a holistic cross-stack approach that can significantly reduce I/O interference between co-running applications on a server without any change to the applications.

At the kernel level, we extend the data structures of the storage stack to pass attributes of (co-running) applications through all the layers of the underlying storage stack, spanning from the OS kernel to the SSD firmware. Based on the given attributes, the block layer and NVMe driver of FlashShare manage the I/O scheduler and the NVMe interrupt handler differently. We also enhance the NVMe controller and cache layer at the SSD firmware level, by dynamically partitioning DRAM in the ULL SSD and adjusting its caching strategies to meet diverse user requirements.

Donghyun, Miryeong, Jie and Sungjoon's SSD simulation infrastructure work has been accepted by MICRO 2018

07.19.2018

In this work, we introduce a new SSD simulation framework, Amber (SimpleSSD 2.0), that models embedded CPU cores, DRAMs, and various flash technologies (within an SSD), and operates under a full-system simulation environment by enabling data transfer emulation. Amber also includes a full firmware stack, including DRAM cache logic and flash firmware such as FTL and HIL, and obeys diverse standard protocols such as SATA, UFS, NVMe, and OCSSD by revising the host DMA engines and system buses of all functional and timing CPU models of a popular full-system simulator (gem5).

Our proposed simulation framework can capture the details of the dynamic performance and power of embedded cores, DRAMs, firmware and flash under the execution of various operating systems and hardware platforms. Using Amber, we characterize several system-level challenges by simulating different types of full systems, such as mobile devices and general-purpose computers, and offer comprehensive analyses by comparing passive storage and active storage architectures. The acceptance rate of MICRO this year is 21%. Congratulations!!

Wonil's low-level flash work has been accepted by MICRO 2018

07.19.2018

Motivated by the problem with the traditional coding, we propose a new coding technique, called Invalid Data-Aware (IDA) coding, which reduces the upper and middle bit read latencies close to the lower bit read latency when the lower bit becomes invalid. The main strategy the IDA coding employs is to merge the duplicated voltage states coming from the bit invalidation and reduce the number of (read) trials needed to identify the voltage state of a cell. To hide the performance and reliability degradation caused by the application of the IDA coding, we also propose to implement it as a part of the data refresh function, which is a fundamental operation in modern SSDs to keep their data safe for longer. With an extensive analysis of a TLC-based SSD using a variety of read-intensive workloads, we report that our IDA coding improves the read response times by 28%, on average; it is also quite effective in devices with different bit densities and timing parameters. The acceptance rate of MICRO this year is 21%. Congratulations!!

Our PRAM-based Persistent-NVDIMM work has been accepted by Flash Memory Summit 2018

05.01.2018

Data is exploding: more data was generated in just the last year than in the entire previous history of the industry. Data volumes are growing faster than ever before and are expected to keep increasing to more than hundreds of zettabytes over the next decade. While this big data explosion can open up new semiconductor business opportunities, memory scaling is unfortunately limited due to low storage core reliability and significant core-to-core interference. In this talk, we will unveil a prototype of a novel NVDIMM-P controller that deals with a new type of Phase Change RAM (PRAM) and discuss several innovative concepts using the PRAM-based NVDIMM-P controller to bridge the gap between storage demand and supply in the data age 2025. The demonstration in this presentation will include several of our empirical evaluation results and the potential benefits brought by the controller's smart technologies, implemented in an FPGA prototype that is aware of the new memory's unique memory-level characteristics and interfaces. Lastly, we will also demonstrate a use-case scenario that automates SSD firmware with our NVDIMM-P controller, which is also implemented in our real FPGA hardware prototype.

Gyuyoung and Miryeong's new memory (PRAM) prototype work has been accepted by HotStorage 2018

04.20.2018

We describe a prototype multi-partition aware new memory controller and subsystem that precisely integrates DRAM with 3x nm phase change RAM (PRAM), referred to as BIBIM. In this work, we reveal the main challenges of a new type of PRAM in getting closer to main processors by evaluating our real 3x nm PRAM with persistent memory benchmarks. BIBIM implements hybrid cache logic in a 2x nm FPGA device, which can hide the long latency imposed by the underlying PRAM modules as well as support persistent operations. The cache logic of our controller can also serve multiple read requests while writing data into a target PRAM bank by taking into account PRAM’s multi-partition architecture. The evaluation results demonstrate that the read and write latencies of BIBIM are 115 ns and 125 ns, respectively, which are 38% and 99% better than those of a pure PRAM-based memory subsystem. In addition, BIBIM can remove blocking reads by 53%, on average, thereby shortening average write-after-read latency by 48%.

Sungjoon and Changlim's paper has been accepted by HotStorage 2018

04.20.2018

We quantitatively characterize diverse performance behaviors of a real ultra-low latency (ULL) SSD archive by using a real 800 GB Z-SSD prototype, and analyze system-level challenges that the current storage stack exhibits. Specifically, our comprehensive study presents i) a performance analysis of the ULL SSD, including a wide range of latency and queue examinations, ii) I/O interference characteristics, which are considered one of the major performance bottlenecks of modern SSDs, and iii) an efficiency and challenge analysis of a polling-based I/O service (newly added in the Linux 4.4 kernel) by comparing it with conventional interrupt-based I/O services. In addition to these performance characterizations, we discuss several system-level implications.

Our collaborative research on scale-out distributed storage systems has been accepted by ICDCS 2018

04.06.2018

In this work, we propose a new deduplication method, which is highly scalable and compatible with existing scale-out storage. Specifically, our deduplication method employs a double hashing algorithm that leverages hashes used by the underlying scale-out storage, which addresses the limits of current fingerprint hashing. In addition, our design integrates the meta-information of the file system and deduplication into a single object, and it controls the deduplication ratio online by being aware of system demands, based on post-processing. We implemented the proposed deduplication method on an open-source scale-out storage system. The experimental results show that our design can save more than 90% of the total amount of storage space, under the execution of diverse standard storage workloads, while offering the same or similar performance compared to conventional scale-out storage.

Wonil's parallel GC and flash utilization work has been accepted by HPDC 2018

03.26.2018

Garbage Collection (GC) has been a critical optimization target for improving the performance of flash-based Solid State Drives (SSDs); the long-lasting GC process occupies the flash resources, thereby blocking normal I/O requests and increasing response times. This is a well-documented problem, and a wide range of prior works successfully hide the negative impact of GC on the I/O response times. In this paper, however, we unveil another serious side-effect of GC, called the plane under-utilization problem. More specifically, while a plane is busy doing GC, the other plane(s) in the same die remain idle, as all the planes in a die share a single command and address path that is dedicated to the GC. We also note that most of the state-of-the-art proposals attacking the GC impact on I/O response times are not able to resolve the plane under-utilization problem, and in turn, miss a great potential to further improve the SSD performance. Thus, we next propose a scheduling technique, I/O-parallelized GC, which leverages the idle planes during GC to serve the blocked I/O requests. As a result, flash resources (planes) can be active during most of the GC time and the blocked I/O requests can get serviced quickly, which in turn improves SSD performance. Using simulation-based evaluations over a wide variety of workloads, we show that the proposed I/O-parallelized GC scheme can improve the response times of the GC-affected I/O requests by 83% (reads) and 70% (writes), by increasing the average plane utilization from the (two planes-per-die) baseline of 50% to 74.4% during GC. The I/O-parallelized GC is orthogonal to prior proposals that hide GC overheads, so they can be combined for further SSD performance improvement. This year, the acceptance rate of the International ACM Symposium on High-Performance Parallel and Distributed Computing (HPDC) is 19%.
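
The plane-utilization figures quoted above follow from a simple average over the planes of a die. The sketch below only illustrates that arithmetic and is not the paper's simulator: the function name is hypothetical, and the 0.488 busy fraction is back-derived from the 74.4% figure purely for illustration, not a number reported in the paper.

```python
def plane_utilization(busy_fractions):
    """Average fraction of time the planes of one die are busy.

    busy_fractions[i] is the share of the observed window (here, one GC
    period) during which plane i is doing useful work.
    """
    if not busy_fractions:
        raise ValueError("a die needs at least one plane")
    if any(not 0.0 <= f <= 1.0 for f in busy_fractions):
        raise ValueError("busy fractions must lie in [0, 1]")
    return sum(busy_fractions) / len(busy_fractions)


# Baseline (two planes per die): one plane runs GC the whole time while
# the other plane sits idle.
baseline = plane_utilization([1.0, 0.0])

# I/O-parallelized GC: the otherwise idle plane serves blocked I/O for part
# of the GC period. 0.488 is an illustrative, back-derived value.
parallelized = plane_utilization([1.0, 0.488])

print(f"baseline: {baseline:.1%}, parallelized: {parallelized:.1%}")
```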

Our many-core work has been accepted by PLDI 2018

02.13.2018

Going beyond a certain number of cores in modern architectures requires an on-chip network more scalable than conventional buses. However, employing an on-chip network in a manycore system (to improve scalability) makes the latencies of the data accesses issued by a core non-uniform. This non-uniformity can play a significant role in shaping the overall application performance. This work presents a novel compiler strategy which involves exposing architecture information to the compiler to enable optimized computation-to-core mapping. Specifically, we propose a compiler-guided scheme that takes into account the relative positions of (and distances between) cores, last-level caches (LLCs) and memory controllers (MCs) in a manycore system, and generates a mapping of computations to cores with the goal of minimizing the on-chip network traffic. This year, the acceptance rate of ACM SIGPLAN Conference on Programming Language Design and Implementation (PLDI) is 21%.
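
To give a feel for position-aware mapping, here is a toy sketch that picks the core minimizing on-chip hop count to a given LLC bank and memory controller. It is not the compiler scheme from the paper: it assumes a 2D mesh where traffic cost is proportional to Manhattan distance, and every name, coordinate, and weight below is hypothetical.

```python
def hops(a, b):
    """Manhattan distance between two (x, y) mesh coordinates."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def mapping_cost(core, llc_bank, mem_ctrl, llc_weight=1.0, mc_weight=1.0):
    """Weighted hop count from a core to its LLC bank and memory controller."""
    return llc_weight * hops(core, llc_bank) + mc_weight * hops(core, mem_ctrl)


def best_core(cores, llc_bank, mem_ctrl, llc_weight=1.0, mc_weight=1.0):
    """Pick the core with the lowest weighted hop count (first one on ties)."""
    return min(
        cores,
        key=lambda c: mapping_cost(c, llc_bank, mem_ctrl, llc_weight, mc_weight),
    )


# Hypothetical 4x4 mesh with the LLC bank and memory controller on one edge.
mesh = [(x, y) for x in range(4) for y in range(4)]
llc_bank, mem_ctrl = (3, 3), (3, 0)
chosen = best_core(mesh, llc_bank, mem_ctrl)
print(chosen, mapping_cost(chosen, llc_bank, mem_ctrl))
```

A real compiler would weigh each computation's actual access counts to LLCs and MCs; the two weights stand in for that information here.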

Mustafa's 3D NAND flash controller work has been accepted by ACM TACO

02.01.2018

Mustafa's paper entitled "ReveNAND: A Fast-Drift Aware Resilient 3D NAND Flash Design" has been accepted by ACM Transactions on Architecture and Code Optimization. In this work, we first present an elastic read reference (VRef) scheme (ERR) for reducing such errors in ReveNAND, our fast-drift aware 3D NAND design. To address the inherent limitation of the adaptive VRef, we introduce a new intra-block page organization (hitch-hike) that can enable stronger error correction for the error-prone pages. In addition, we propose a novel reinforcement-learning based smart data refill scheme (iRefill) to counter the impact of fast-drift with minimum performance and hardware overhead. Finally, we present the first analytic model to characterize fast-drift, and evaluate its system-level impact. Congratulations, Mustafa!

Jie's flash-based hardware accelerator work has been accepted by EuroSys 2018

01.23.2018

Jie's paper entitled "FlashAbacus: A Self-governing Flash-based Accelerator for Low-power Systems" has been accepted by EuroSys 2018. In this paper, we present FlashAbacus, a data-processing accelerator that self-governs heterogeneous kernel executions and data storage accesses by integrating many flash modules in lightweight multiprocessors. The proposed accelerator can simultaneously process data from different applications with diverse types of operational functions, and it allows multiple kernels to directly access flash without the assistance of a host-level file system or an I/O runtime library. The acceptance rate of EuroSys this year is around 16% (our work is one of 43 accepted out of 262 submissions). Congratulations, Jie!

Jie's GPU paper has been accepted by IPDPS 2018

01.22.2018

Jie's GPGPU paper entitled "CIAO: Cache Interference-Aware Throughput-Oriented Architecture and Scheduling for GPUs" has been accepted by IPDPS 2018. In this paper, we propose a Cache Interference-Aware throughput-Oriented (CIAO) on-chip memory architecture and warp scheduling, which exploit unused shared memory space and take an approach opposite to cache locality-aware warp scheduling. Specifically, the CIAO on-chip memory architecture can adaptively redirect memory requests of severely interfering warps to unused shared memory space to isolate the memory requests of these interfering warps from those of interfered warps. If these interfering warps still incur severe cache interference, CIAO warp scheduling then begins to selectively throttle execution of these interfering warps. The acceptance rate of IPDPS this year is around 24%. Congratulations!

Our 3D NAND flash paper has been accepted by USENIX FAST 2018

01.01.2018

3D NAND flash memories promise unprecedented flash storage capacities, which can be extremely important in certain application domains where both storage capacity and performance are first-class target metrics. However, a block of 3D NAND flash contains many more pages than its 2D counterpart. This increased number of pages-per-block has numerous ramifications, such as longer erase latency, higher garbage collection costs, and increased write amplification factors, which can collectively prevent 3D NAND flash products from becoming mainstream in the high-performance storage domain. In this paper, we introduce PEN, an architecture-level mechanism that enables partial-erase of flash blocks. Using our proposed partial-erase support, we also discuss how one can build a custom garbage collector for two types of flash translation layers (FTLs), namely, block-level FTL and hybrid FTL. Our paper is one of 23 accepted out of 139 submissions (its acceptance rate is around 16%). Congratulations!

Wonil's paper has been accepted by ICCD'17

09.01.2017

Wonil's paper entitled "A Scale-Out Enterprise Storage Architecture" has been accepted by the IEEE International Conference on Computer Design (ICCD) 2017. In this work, we propose UT-SSD, a novel enterprise-scale scale-out SSD architecture, which enables the connection of a large number of (1000s) flash chips using native PCIe buses instead of conventional channels. We also propose an architectural enhancement that further improves the performance of our base UT-SSD by maximizing flash utilization. Our experimental analysis of UT-SSD with workloads drawn from various domains shows that the throughput of UT-SSD can reach up to 110 GB/s by successfully aggregating the bandwidth of 4096 flash chips. In addition, our proposed enhancement over this base UT-SSD increases the flash utilization by 50.7%, which in turn results in 116% additional throughput improvement. The acceptance rate of ICCD 2017 is 29%.

Donghyun's paper has been accepted by NPC'17 as the top-ranked paper and invited to IJPP

08.06.2017

Donghyun's paper entitled "Enabling Realistic Logical Device Interface and Driver for NVM Express Enabled Full System Simulations" has been accepted by the IFIP International Conference on Network and Parallel Computing (NPC). This work implements an NVMe disk and controller to enable a realistic storage stack of next-generation interfaces, and integrates them into gem5 and a high-fidelity solid state disk simulation model. We verify the functionalities of the NVMe implementation using a standard user-level tool, the NVMe command line interface. Our evaluation results reveal that the performance of a high-performance SSD can vary significantly with the software stack and storage controller, even under the same device configuration and degree of parallelism. Specifically, the traditional interface caps the performance of the SSD by 85%, whereas the NVMe interface we implemented in gem5 can successfully expose the true performance aggregated by the many underlying flash media. This work will be presented on October 20-21, 2017, in Hefei. This year, the NPC acceptance rate is 24% (slightly below 25%), and Donghyun's work is ranked as a top paper at NPC'17 and invited to the Special Issue of the International Journal of Parallel Programming (IJPP).

Jie's paper has been accepted by NPC'17

08.06.2017

Jie's paper entitled "An In-depth Performance Analysis of Many-Integrated Core for Communication Efficient Heterogeneous Computing" has been accepted by the IFIP International Conference on Network and Parallel Computing (NPC). In this work, we build a real CPU+MIC heterogeneous cluster, which consists of eight main processor cores and 244 physical MIC cores (61 cores per MIC device). We analyze the performance behaviors of the heterogeneous cluster by examining different communication methods such as message passing and remote direct memory access. Our evaluation results and in-depth studies reveal that i) aggregating small messages can improve network bandwidth without violating latency restrictions, ii) while MICs can execute on hundreds of hardware cores, the highest network throughput is achieved when only 4 to 6 point-to-point connections are established for data communication, and iii) data communication over multiple point-to-point connections between the host and MICs introduces severe load imbalance, which needs to be optimized for future heterogeneous computing. This work will be presented on October 20-21, 2017, in Hefei. This year, the NPC acceptance rate is 24% (slightly below 25%).

Miryeong's paper has been accepted by IEEE IISWC'17

07.29.2017

Miryeong's paper entitled "TraceTracker: Hardware/Software Co-Evaluation for Large-Scale I/O Workload Reconstruction" has been accepted by the IEEE International Symposium on Workload Characterization (IISWC), which is one of the major conferences in CS. This symposium, sponsored by the IEEE Computer Society and the Technical Committee on Computer Architecture, focuses on characterizing and understanding emerging applications in consumer, commercial and scientific computing. In this work, we propose TraceTracker, a novel hardware/software co-evaluation method that allows users to reuse a broad range of existing block traces by keeping most of their execution context and user scenarios while adjusting them with new system information. Specifically, TraceTracker’s software evaluation model can infer CPU burst times and user idle periods from old storage traces, whereas its hardware evaluation method remasters the storage traces by incorporating the inferred time information, and updates all inter-arrival times by making them aware of the target storage system. We apply the proposed co-evaluation model to 577 traces, which were collected by different institutions at different server locations a decade ago, and revive the traces on a high-performance flash-based storage array. This work will be presented on October 1-3, 2017, in Seattle, Washington, USA.

Sungjoon's paper has been accepted by IEEE IISWC'17

07.29.2017

Sungjoon's paper entitled "Understanding System Characteristics of Online Erasure Coding on Scalable, Distributed and Large-Scale SSD Array Systems" has been accepted by the IEEE International Symposium on Workload Characterization (IISWC), which is one of the major conferences in CS. To understand the performance impacts and system implications of online erasure coding, we build a real storage cluster that employs more than fifty high-performance SSDs with around a hundred computation cores and evaluate the cluster with a popular open-source distributed parallel file system. In this work, we analyze system behaviors of online erasure codes from the following five viewpoints: i) overall performance characteristics compared to those of a conventional replication method, ii) computation and software overheads imposed by the erasure codes, iii) RS-coded storage volume overheads, iv) private network traffic, which is invisible to a client but exists in the storage cluster, and v) physical data layout impacts on RS-coded SSD arrays. For all these analyses, we examine two representative RS configurations, which are used by Google and Facebook file systems, and compare them with the triple replication that the parallel file system employs as a default fault tolerance mechanism. Lastly, we collected 54 block-level traces from the real RS-coded cluster, which will be freely available to download. This work will be presented on October 1-3, 2017, in Seattle, Washington, USA.

SimpleSSD has been accepted by IEEE CAL

05.12.2017

Our paper entitled "SimpleSSD: Modeling Solid State Drive for Holistic System Simulation" has been accepted by IEEE Computer Architecture Letters. This work presents an educational SSD simulation framework that models all detailed characteristics of hardware and software, while simplifying the nondescript features of storage internals. In contrast to existing SSD simulators, SimpleSSD can be easily integrated into publicly available full-system simulators. In addition, it can accommodate a complete storage stack and evaluate the performance of SSDs along with diverse memory technologies and microarchitectures, facilitating simulations that explore the full design space at different levels of system abstraction.

Wonil's paper has been accepted by SIGMETRICS'17

03.23.2017

Wonil's paper entitled "Exploiting Data Longevity for Enhancing the Lifetime of Flash-based Storage Class Memory" has been accepted by SIGMETRICS'17. In this work, as an effort to improve flash lifetime, first, by quantifying data longevity in an SCM, we show that a majority of the data stored in a solid-state SCM do not require the long retention times provided by flash memory (e.g., up to 10 years in modern devices); second, by exploiting retention time relaxation, we propose a novel mechanism, named Dense-SLC (D-SLC), which enables us to perform multiple writes into a cell during each erase cycle for lifetime extension; and finally, we discuss the required changes in the flash software management (FTL) in order to use these characteristics for extending the lifetime of the solid-state part of an SCM. Using an extensive simulation-based analysis of a flash-based SCM, we demonstrate that D-SLC is able to significantly improve device lifetime (between 5.1X and 8.6X) with no performance overhead and only negligible changes to the FTL software.

U.S. Government supports Dr. Jung's non-volatile memory research in supercomputing

02.04.2017

As the Principal Investigator of Yonsei University, Dr. Jung has contracted with the United States Government, represented by the Department of Energy (DOE) for the management and operation of the Lawrence Berkeley National Laboratory (LBNL). For around the next two years, Dr. Jung's computer architecture and memory system laboratory will conduct research work in the high performance computing and supercomputer areas, generally identified as the design and implementation of FPGA-based non-volatile memory controllers.

Dr. Jung's paper has been accepted by IEEE CAL

01.21.2017

Dr. Jung's paper entitled "NearZero: An Integration of Phase Change Memory with Multi-core Coprocessor" has been accepted by IEEE Computer Architecture Letters. This paper proposes a novel DRAM-less coprocessor architecture that precisely integrates a state-of-the-art phase change memory into its multi-core accelerator. In this work, we implement an FPGA-based memory controller that extracts important device parameters from real phase change memory chips, and applies them to a commercially available hardware platform that employs multiple processing elements over a PCIe fabric. The overall acceptance rate of IEEE CAL has consistently run at about 25%.

Dr. Jung's collaborative paper has been accepted by ASPLOS 2017

11.10.2016

Dr. Jung's collaborative paper entitled "Exploiting Intra-Request Slack to Improve SSD Performance" has been accepted by ASPLOS 2017. This paper opens the door to a new class of schedulers that leverage the slack between the sub-requests of an I/O request in order to improve response times. Specifically, we present the design and implementation of a slack-enabled re-ordering scheduler, called Slacker, for sub-requests issued to each flash chip. Layered under a state-of-the-art SSD request scheduler, Slacker estimates the slack of each incoming sub-request to a flash chip and allows it to jump ahead of existing sub-requests that have sufficient slack, so as not to detrimentally impact their response times. Slacker is simple to implement and imposes only marginal additions to the hardware. This year's acceptance rate of ASPLOS is 17% -- 56 papers were accepted out of 321 submissions.
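
The re-ordering idea can be illustrated with a small Python sketch. This is not the paper's algorithm: the `SubRequest` fields, the tail-first scan, and the assumption that each queued sub-request already carries a slack estimate are all simplifications introduced here for illustration.

```python
from dataclasses import dataclass


@dataclass
class SubRequest:
    name: str
    service_time: int  # time units the flash chip stays busy (assumed known)
    slack: int         # delay tolerated without slowing the parent request


def enqueue_with_slack(queue, incoming):
    """Insert `incoming` into one chip's queue and return its position.

    Scanning from the tail, the newcomer jumps ahead of each queued
    sub-request whose slack can absorb the newcomer's service time.
    Every bypassed sub-request gives up that much slack.
    """
    pos = len(queue)
    while pos > 0 and queue[pos - 1].slack >= incoming.service_time:
        pos -= 1
    for bypassed in queue[pos:]:
        bypassed.slack -= incoming.service_time
    queue.insert(pos, incoming)
    return pos


# Hypothetical queue for one flash chip.
chip_queue = [
    SubRequest("A", service_time=4, slack=0),
    SubRequest("B", service_time=4, slack=10),
    SubRequest("C", service_time=4, slack=3),
]
position = enqueue_with_slack(chip_queue, SubRequest("D", service_time=3, slack=0))
print(position, [s.name for s in chip_queue])
```

In this example the newcomer passes C and B, which can both absorb three time units of delay, but stops behind A, which has no slack to give.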

Mustafa's paper has been accepted by USENIX INFLOW'16

09.16.2016

Mustafa's paper entitled "Couture: Tailoring STT-MRAM for Persistent Main Memory" has been accepted by USENIX INFLOW. While spin-transfer torque magnetoresistive RAM (STT-MRAM) is a plausible replacement for DRAM, given its high endurance and near-zero leakage, conventional STT-MRAM cannot directly substitute for DRAM due to its large cell area and the high latency and energy costs of writes. In this work, we present Couture - a main memory design using tailored STT-MRAM that can offer a storage density comparable to DRAM and high performance with low power consumption. See you in November in Savannah, USA!

Jie's paper has been accepted by USENIX INFLOW'16

09.16.2016

Jie's paper entitled "A Design of Read-Oriented STT-MRAM Storage for Energy-Efficient Non-Uniform Cache Architecture" has been accepted by USENIX INFLOW. In this paper, we propose a hybrid non-uniform cache architecture (NUCA) that employs STT-MRAM as read-oriented on-chip storage. The key observation here is that many cache lines in the LLC are only touched by read operations without any further write updates. These cache lines, referred to as singular-writes, can be internally migrated from SRAM to STT-MRAM in our hybrid NUCA. Our approach can significantly improve system performance by avoiding many cache read misses with the larger STT-MRAM cache blocks, while it keeps the cache lines that require write updates in the SRAM cache. This work will be demonstrated in Savannah, USA.

Architecture of Next-Generation Parallel File Systems in the NVM-Era

08.14.2016

We will have an invited talk by Dr. Ellis Wilson, who is a team leader covering three of Panasas' core product modules. It will be held at VC 204, 10 AM, Aug 16, 2016. This talk will explore some of the classical challenges in PFS design, discuss how those challenges are changing with the advent of faster and denser storage- and memory-class NVM, and explore some of the novel ways PFS can leverage NVM in the future. Non-Volatile Memories (NVM) such as flash storage (in SATA and PCIe incarnations) and memory-class flash such as NV-DIMM have drastically changed the landscape in PFS architecture, and next-generation NVM (e.g., PCM, X-Point, etc.) promises to invalidate old challenges and present new ones to an even greater extent. Architecture, design, and implementation of a robust and performant Parallel File System (PFS) is arguably one of the most complex computing storage challenges of the last three decades. Given the long runway, often around ten years, to the development and delivery of a stable PFS, architects in the area must attempt to build flexibility into their design that can cope with coming hardware changes such that the software does not require a complete overhaul.

One of our collaborative research works got into Supercomputing (SC) 2016

06.16.2016

One of our collaborative research works entitled "Exploring the Potentials of Parallel Garbage Collection in SSDs for Enterprise Storage Systems" has been accepted by Supercomputing (SC) 2016. This year, the acceptance rate of SC is 18%. This paper proposes a novel GC strategy, called Parallel GC (PaGC), whose goal is to proactively run GC on the remaining planes of a flash chip whenever any of its planes needs to execute on-demand GC. The resulting PaGC system improves the response time of I/O requests by up to 45% (32% on average) for different GC settings and I/O workloads.
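
The core PaGC decision described above can be sketched in a few lines of Python. This is an illustrative simplification, not the paper's implementation: the free-block threshold used as the on-demand trigger and the function name are assumptions made here.

```python
def planes_running_gc(free_blocks, threshold, parallel=True):
    """Return the plane indices of one flash chip that run GC in this round.

    free_blocks: free-block count per plane.
    threshold:   a plane at or below this count needs on-demand GC
                 (an assumed trigger, chosen for illustration).
    parallel:    True models the PaGC idea, False models plain on-demand GC.
    """
    needy = [p for p, free in enumerate(free_blocks) if free <= threshold]
    if not needy:
        return []  # no plane is short of free blocks, so nothing runs
    if parallel:
        # One plane must collect anyway, so the remaining planes
        # of the same chip collect proactively alongside it.
        return list(range(len(free_blocks)))
    return needy


chip = [12, 2, 9, 15]  # hypothetical free-block counts for a 4-plane chip
print(planes_running_gc(chip, threshold=2, parallel=False))
print(planes_running_gc(chip, threshold=2, parallel=True))
```

With plain on-demand GC only plane 1 collects, while the PaGC-style policy uses the same busy period to collect on all four planes.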

Dr. Jung is awarded NRF's 5-Year Young Researcher Career Program

06.14.2016

Dr. Jung received the NRF Young Researcher Career award. His research entitled "A Design of Storage-based Next Generation Accelerator for Large-Scale Data Processing" will be supported by the National Research Foundation of Korea for the next five years (2016 ~ 2021). This year, the acceptance rate for all NRF career awards is 35%, and the 5-year program he received accounts for 10% of all NRF career programs. Dr. Jung's research advocates a radically different approach to the design of next generation accelerators by bringing accelerator resources and storage resources together, and tailoring these to improve data flow, ultimately maximizing performance, power efficiency and reliability.

Miryeong is going to Lawrence Berkeley National Laboratory as a summer intern

06.13.2016

Miryeong is going to join Lawrence Berkeley National Laboratory (LBNL) this summer as an intern. She will conduct research related to Exascale computing and new memory technology in high performance computing (HPC) at the Computational Research Division (CRD) of LBNL. LBNL is a member of the national laboratory system supported by the U.S. Department of Energy through its Office of Science. It is charged with conducting unclassified research across a wide range of scientific disciplines. Located on a 202-acre site in the hills that offers spectacular views of the San Francisco Bay, LBNL employs approximately 3,232 scientists, engineers and support staff. The Lab’s total costs for FY 2014 were $785 million.

Dr. Jung's paper got accepted by IEEE Transactions on Parallel and Distributed Systems

06.02.2016

Dr. Jung's single-author paper entitled "Exploring Parallel Data Access Methods in Emerging Non-Volatile Memory Systems" has been accepted by IEEE Transactions on Parallel and Distributed Systems. The exploitation of internal parallelism over hundreds of NAND flash memories is becoming a key design issue in high-speed solid state disks (SSDs). In this study, we simulate a cycle-accurate SSD platform with diverse parallel data access methods and twenty-four page allocation strategies, which are geared toward exploiting both system-level parallelism and flash-level parallelism, using a variety of design parameters.

Wonil, Jie and Shuwen's paper got accepted by NVMSA 2016

06.01.2016

Wonil, Jie and Shuwen's paper entitled "An In-Depth Study of Next Generation Interface for Emerging Non-Volatile Memories" has been accepted by NVMSA'16. In this work, we model diverse interface-level design parameters such as PCI Express, NVMe protocol, and different rich queuing mechanisms by considering a wide spectrum of host-level system configurations to explore NVMe with assorted user scenarios. We also assemble a comprehensive memory stack with different types of emerging NVM technologies, which can give us detailed NVMe-related statistics like I/O request lifespans and I/O thread-related parallelism. Our evaluation results reveal that, i) while NVMe handshaking is light-weight for flash memory that uses block-based accesses (Block NVM), it can impose tremendous overheads for memristor technology (DRAM-like NVM), ii) in contrast to the common expectation, the performance of an NVMe-equipped system may not improve in a scalable fashion as the queue depth and the number of queues increase, and iii) more- and deeper-queue systems atop a Block NVM can significantly suffer from tremendous host-side memory requirements, whereas a DRAM-like NVM can cause frequent system stalls due to NVMe’s inefficient interrupt service routine.

Miryeong's paper got accepted by ASBD@ISCA 2016

05.30.2016

Miryeong's paper entitled "A Study for Block-level I/O Trace Reconstruction on All-Flash Arrays" has been accepted by ASBD@ISCA 2016. In this work, we build a high performance storage node with an all-flash array that employs multiple non-volatile memory express (NVMe) based solid state disks (SSDs), and reconstruct hundreds of block I/O traces using the storage node. We quantitatively analyze new I/O behaviors of the all-flash array, including user idles and system interval times, and show the remarkable differences between our reconstructed traces and the original ones from diverse perspectives. Our empirical analysis indicates that, while block-level I/O traces are widely used in many performance models and simulation studies in an attempt to research the properties of future SSD and flash array systems, these models need to be reconsidered with new traces. In addition, our evaluation results show that each SSD volume in a storage node experiences a different trend of system interval times, which in turn can be used for further optimization in developing a high performance storage system.

Mustafa is going to Intel

05.26.2016

Mustafa is going to join Intel this summer. As a CAMEL member, he greatly improved his research capability over the past two years and performed studies related to emerging non-volatile memory technologies, including 3D NAND and MRAM. He also secured several key research vehicles related to machine learning in architecture. We believe that this is good for him and for Intel. As a software engineer, he will start exploring different types of approximation algorithms and research machine learning further. Good job, Mustafa!

New PCIe research results are available in IEEE Transactions on Computers

03.26.2016

Dr. Jung's single-author paper entitled "Exploring Design Challenges in Getting Solid State Drives Closer to CPU" is now available in IEEE Transactions on Computers (Issue: 2016, April 1). In this paper, he quantitatively analyzes the challenges faced by PCIe SSDs in getting flash memory closer to the CPU, and studies two representative PCIe SSD architectures (from-scratch SSD and bridge-based SSD) using state-of-the-art real SSDs with his in-house resource analyzer and dynamic evaluation platform. This paper's experimental analysis reveals that 1) while the from-scratch SSD approach offers remarkable performance improvements, it requires enormous host-side memory and computational resources; 2) the performance of the from-scratch SSD significantly degrades in a multi-core system; 3) the bridge-based SSD architecture has the potential to improve performance by co-optimizing its flash software and controllers; 4) PCIe SSDs’ latencies significantly degrade with their storage-level queueing mechanism; 5) the tested PCIe SSDs consume 200 ~ 500 percent more dynamic power than a conventional SSD; and 6) the power consumption increases by 33 percent, on average, when PCIe SSDs suffer from the performance drops caused by garbage collections.

Read more: [ paper (IEEE link) ]

New NANDFlashSim is now available in ACM Transactions on Storage

02.08.2016

Our paper titled “NANDFlashSim: High-Fidelity, Micro-Architecture-Aware NAND Flash Memory Simulation” is now available in ACM Transactions on Storage (Volume 12 Issue 2, February 2016). In this work, we introduce NANDFlashSim, a high-fidelity, latency-variation-aware, and highly configurable NAND-flash simulator, which implements a detailed timing model for 16 state-of-the-art NAND operations. Using NANDFlashSim, we notably discover the following. First, regardless of the operation, reads fail to leverage internal parallelism. Second, MLC provides lower I/O bus contention than SLC, but contention becomes a serious problem as the number of dies increases. Third, many-die architectures outperform many-plane architectures for disk-friendly workloads. Finally, employing a high-performance I/O bus or an increased page size does not enhance energy savings. Our simulator is available at http://nfs.camelab.org.

Read more: [ NANDFlashSim repo ] [ paper (ACM link) ]

CAMEL welcomes new researchers and a Ph.D. student

01.04.2015

We are very happy to welcome Jaesoo Lee, Gyuyoung Park and Miryeong Kwon to CAMEL. Jaesoo Lee earned his Ph.D., M.S. and B.S. from Seoul National University. In addition to this academic career, he has extensive industrial experience at Samsung Electronics and The-AIO. He researched and developed emerging solid state disks and non-volatile memory technologies for around 10 years, as a principal engineer and team leader. Gyuyoung Park earned his M.S. and B.S. from Georgia Institute of Technology and Korea University. He also spent 5 years at LG Electronics. Miryeong Kwon is starting graduate school this year. She earned her B.S. from Yonsei University and graduated from Incheon Science High School. A combined "Welcome" and "Good luck" are in order for all new members!

New research vehicle for all-flash array based high performance computing

12.25.2015

We are pleased to have KANDEMIR, an HPC testbed that employs heterogeneous processors and constructs its storage system with only NVM/SSD devices. The current development is in PHASE1, which initially has around 472 Haswell-EP Xeon processor cores, 10,167 coprocessor cores and 3.5TB DRAM on an all-flash array system. With this research tool, we are analyzing a wide spectrum of flash-based storage workloads and tailoring a parallel file system (Lustre) to accommodate the underlying high performance SSD systems. In this phase, we're building up the all-flash array systems with sixty different NVM Express based SSD devices and two hundred SATA-based high performance SSD devices. Overall, we plan to build up Kandemir with around 3,000 heavy processor cores and more than 50,000 coprocessor cores. All these cluster testbed cores will be connected to around 600 flash-based SSD systems and a few FPGA-based NVDIMM systems that we are designing.

Our recent PCM work has been accepted by HPCA 2016

11.10.2015

Our paper entitled "DUANG: Lightweight Page Migration and Adaptive Asymmetry in Memory Systems" has been accepted by HPCA'16. In this work, we propose a resistive memory architecture that shares row buffers between a pair of neighboring banks, enabling two attractive techniques. Our architecture allows systems to dynamically partition the shared row buffers between the two banks based on the memory access patterns. We expect that this technique can improve the performance of a system running multi-program workloads by up to 10%. In addition, for a given asymmetric memory system, our architecture supports very lightweight page migrations between slow and fast banks. This technique can capture on average 94%-97% of the potential performance of a memory architecture comprised of only fast banks. DUANG is the result of a collaboration with the University of Illinois at Urbana-Champaign. This year's acceptance rate of HPCA is 22% (over 240 papers).

New Project will be supported by Ministry of Science, ICT and Future Planning

11.01.2015

Our lab has been selected for Next Generation Information/Computing Technologies research, supported by the Ministry of Science, ICT and Future Planning (MSIP). The main goal of this MSIP research project is to secure CORE software technologies and components for future large-scale computing systems. We plan to explore a new storage solution and energy-efficient I/O acceleration for next generation data processing and computing systems. This project will be performed in collaboration with other research universities, including SNU, KAIST and POSTECH, and it will be supported by MSIP for the next five years (2015~2020). We’re so excited to bring in new research vehicles and dig deeper into next generation storage solutions!

ACM SRC Award

10.23.2015

Jie, who works with Dr. Jung, received an award from the ACM Student Research Competition (SRC) this year as the second runner. SRC offers a unique forum for undergraduate and graduate students to present their original research at well-known ACM sponsored and co-sponsored conferences before a panel of judges and attendees. Usually, it is a two-phase competition. All students will be evaluated by a minimum of three faculty members and professional computer scientists attending the conference activities, none of whom are affiliated with the student's university. For ACM SRC this year at PACT, he demonstrated one of our ongoing research topics, entitled "Integrating 3D Resistive Memory Cache into GPGPU for Energy-Efficient Data Processing".

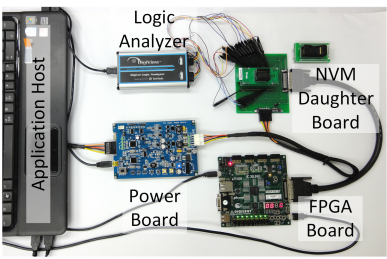

OpenNVM debuts in ICCD 2015

10.22.2015

We demonstrated our OpenNVM project in New York. One of the challenges in exploring emerging non-volatile memory (NVM) technologies is that there are no publicly available memory controllers or corresponding IPs. Because of this, prior studies are forced to rely on limited information or on values produced by simulation. While these simulation values and results have contributed greatly and have led to new architectures and system configurations that apply diverse NVM technologies, the conclusions or design choices can vary significantly depending on the values or information used. Our OpenNVM offers open-licensed FPGA-based memory controllers that provide not only Verilog code for real Magnetic RAM (MRAM) and Triple-Level Cell NAND flash (TLC) but also Python-based host-side applications to help researchers explore new NVM devices under diverse real storage workloads.

Read more: [ OpenNVM repo ] [ paper ] [ slides ]

NVMMU debuts in PACT 2015

10.19.2015

We have successfully demonstrated one of our recent research projects, NVMMU, which is oriented toward making SSD and GPU heterogeneous architectures efficient, at PACT’15 (San Francisco). This work intensively analyzes the performance bottlenecks of many GPU-accelerated big data analytics applications; we emphasize the data movement overheads imposed not only by the redundant data copies between an SSD and a GPU, but also by user-mode and kernel-mode switches. To address this, we demonstrate NVMMU, which can directly forward input/output data from SSD to GPU (and vice versa). Our NVMMU supports a kind of software-defined heterogeneous architecture, which doesn’t require any hardware/software modification of the underlying storage systems or commodity GPU devices.

Read more: [ paper ]

System on Chip for High-Performance Computing Workshop

10.05.2015

Our CoDEx team will offer an HPC-SoC workshop in San Francisco that focuses on semi-custom, application-targeted designs, and server processing for HPC and data centers, with the goal of developing a strategy for an open fabric that is targeted at SoC designs for high-end computing applications. Specifically, our current HPC ecosystem relies upon Commercial off-the-Shelf (COTS) building blocks to enable cost-effective design by sharing costs across a larger ecosystem. Modern HPC nodes use commodity chipsets and processor chips integrated together on custom motherboards. We are embarking upon a new era for commodity HPC where the chip acts as the “silicon motherboard” that interconnects commodity Intellectual Property (IP) circuit building blocks to create a complete integrated System-on-a-Chip (SoC). This approach is still very much COTS, but the commodities are licensable IP for pre-verified circuit designs (the lego-blocks for SoC designs) rather than the chips. It achieves cost-competitiveness because the dominant cost of designing a chip is the cost of verifying the circuit building blocks. The cost benefits derive from the ability to leverage a commodity ecosystem of embedded IP logic components where the non-recurring expense (NRE) cost of designing and verifying a new processor or memory controller design (an IP building block) can be amortized by licensing the technology to myriad embedded applications. The market for licensed circuit IP in the embedded space is a much larger marketplace (both in volume and total revenue) than that for server chips, and the market segment for SoC building blocks is growing at a far faster pace than the current server chip market. HPC system designers should use this new avenue to leverage the cost advantages of COTS technology. We are excited to see you all in San Francisco in October!!

Read more: [ CoDEx ] [ OpenNVM ] [ OpenSoC ]

Jie's work got accepted by ACM Student Research Competition

09.01.2015

Our work titled "Integrating 3D Resistive Memory Cache into GPGPU for Energy-Efficient Data Processing" has been accepted to the 2015 ACM Student Research Competition!! General purpose graphics processing units (GPUs) have become a promising solution for processing multiple data by taking advantage of massive multithreading. Thanks to thread-level parallelism, GPU-accelerated applications improve the overall system performance by up to 40 times, compared to a CPU-only architecture. However, data-intensive GPU applications often generate a large number of irregular data accesses, which results in cache thrashing and contention problems. The cache thrashing in turn can introduce a large number of off-chip memory accesses, which not only wastes tremendous energy moving data between the on-chip cache and off-chip global memory, but also significantly limits system performance due to many stalled load/store instructions. In this work, we redesign the shared last-level cache (LLC) of GPU devices by introducing non-volatile memory (NVM), which can address the cache thrashing issues with low power consumption. Jie will demonstrate his work in San Francisco in October.

Our GPU+SSD heterogeneous system paper got into PACT'15

08.24.2015

Our paper titled "NVMMU: A Non-Volatile Memory Management Unit for Heterogeneous GPU-SSD Architectures" has been accepted by PACT'15. Thanks to massive parallelism in modern Graphics Processing Units (GPUs), emerging data processing applications in GPU computing exhibit ten-fold speedups compared to CPU-only systems. However, this GPU-based acceleration is limited in many cases by the significant data movement overheads and inefficient memory management for host-side storage accesses. To address these shortcomings, this paper proposes a non-volatile memory management unit (NVMMU) that reduces the file data movement overheads by directly connecting the Solid State Disk (SSD) to the GPU. We are excited to see you all in San Francisco in October!!

Jie, Gieseo and Mustafa's paper got into ICCD'15

08.02.2015

Our paper titled "OpenNVM: An Open-Sourced FPGA-based NVM Controller for Low Level Memory Characterization" has been accepted by the IEEE International Conference on Computer Design (ICCD'15). Even though NVM devices are now available from different manufacturers, the lack of an appropriate NVM controller and evaluation platform in the public domain is the main challenge in extracting empirical data from these real devices. In this paper, we present OpenNVM, an open-sourced, highly configurable FPGA-based evaluation and characterization platform for various NVM technologies. Through our OpenNVM, this work reveals important low-level NVM characteristics, including i) static and dynamic latency disparity, ii) error rate variation, iii) power consumption behavior, and iv) the correlation between frequency and NVM operational current. All FPGA source code and detailed information on our hardware design will also be available to download for free. Please check the following website, which we're preparing.

Read more: [ OpenNVM repository ]

Our DSP-based SSD Emulator and FPGA-based NVM controller have debuted at FCRC 2015

06.21.2015

We successfully demonstrated our ongoing projects, i) a hardware emulation SSD platform and ii) an FPGA-based NVM controller, at FCRC'15 in Portland. The DSP-based hardware emulation platform is expected to be an excellent research vehicle, which can satisfy both industry and academia demands in exploring the full system design space, ranging from SSD hardware architecture to operating system design parameters, without conflicts over vendor-specific IP issues. In contrast, the FPGA-based NVM controller is used to extract a wide range of characteristics of NVM devices such as MRAM and TLC. For the FPGA-based NVM controller work, we are considering releasing all the materials and source code into the public domain soon.

Read more: [ CoDEN ]

Jie, Damian and Erick got a paper into ASBD@ISCA 2015

05.02.2015

One of the studies on which we recently collaborated with Texas Instruments has been accepted by the Workshop on Architectures and Systems for Big Data, held in conjunction with ISCA'15. This paper, titled "A Hardware/Software CoDesign Emulation Platform for SSD-Accelerated Near Data Processing", describes a system to evaluate near-data processing in flash devices by implementing a real hardware prototype on a multi-core system. The prototype appears to be a credible and effective platform for evaluating systems where high-bandwidth storage can be read and processed on its way to the host.

This work will be demonstrated in Portland, Oregon, in June.

Read more: [ Program ]

Gieseo got a paper into WARP@ISCA 2015

04.28.2015

One of our recent papers, entitled "NVM-Charade: An Open Sourced FPGA-Based NVM Characterization Scheme", has been accepted by WARP at ISCA'15.

Accurate characterization of real device samples is essential for understanding the true potential of the emerging non-volatile memories (NVMs), and identifying their optimal placement in the memory hierarchy. Unfortunately, most existing publicly-available resources provide performance and reliability data acquired from analytic models or simulation based studies. In this work, we present NVM-Charade, an open-sourced, highly configurable FPGA based empirical data extraction scheme for various NVM technologies. At this juncture, our proposed scheme is a comprehensive evaluation setup with fine-grained user control that extracts reliable endurance, retention, latency, and power data for a real MRAM product.

Read more: [ Program ]

Shuwen is going to Intel

04.03.2015

Shuwen is going to join Intel when she finishes her master's degree this semester. During the past 2 years, she attended our research mentoring program and developed a good research topic associated with novel GPU thread block scheduling as her first base. In addition, she secured several key technologies in emerging non-volatile memory. We believe that this is good for her and good for Intel. We're a little sad, though, as she is good as gold to all of our lab members. Well done, Shuwen, and the best of luck! We'll miss you a lot.

Jie's WiP report has been accepted by FAST 2015

01.20.2015

Jie's work-in-progress report titled "Shared Non-Volatile Memory Cache for Energy-Efficient High Throughput GPU Computing" has been accepted by USENIX FAST'15. In this work, we redesign the shared last-level cache (LLC) of a modern GPU device by introducing non-volatile memory (NVM), which can significantly address the cache thrashing issues with low power consumption. Our results show that our baseline NVM-cache improves the overall IPC performance of a conventional LLC on diverse memory intensive workloads by 10%, while reducing the power consumption of the underlying DRAM and the LLC itself by 36% and 55%, respectively. Further, our 3D-stacked NVM-cache improves such performance by 20% with power consumption similar to the conventional cache.

This work was demonstrated in San Jose, Feb 16~19 2015.

National Science Foundation (NSF) supports Prof. Jung's Host-Assisted, Software-Defined Solid-State Disk project (Single PI)

10.15.2014

Over the past two decades flash-based storage has crept up from a niche and relatively unknown storage technology to the mobile and embedded medium of choice, and made significant inroads in the laptop and server arenas in the incarnation of the Solid State Disk (SSD). Increasingly, many applications use SSDs and trends indicate that SSD usage will grow significantly. However, SSDs are no silver bullet - in reality, the flash firmware in all commercial SSDs is very rigid and highly unadaptable across input/output (I/O) workloads, creating serious challenges which include the added cost of firmware per SSD, firmware inflexibility, and assisting SSD hardware limitations. Prof. Jung's host-assisted, software-defined solid-state disk (HASD) project will address these key issues. The research will investigate how SSDs should achieve the flexibility they need to perform best for a variety of I/O workloads by being software-defined.

Department of Energy (DOE) supports Prof. Jung's non-volatile memory HW/SW codesign project

10.01.2014

To explore the impacts of diverse non-volatile memory (NVM) technologies on modern computer architecture and systems, fast, high-fidelity and accurate NVM simulation/emulation research tools are required. Unfortunately, modeling such a broad range of NVM technologies is a non-trivial research area, as there are multiple design parameters and unprecedented device-level considerations. Our lab is developing several NVM research frameworks, including open-source simulation models, FPGA-based NVM emulators, and hardware validation prototypes. In addition to offering these valuable research vehicles, we also propose a hardware-software codesign environment that will allow application, algorithm and system developers to influence the direction of future architectures, thereby satisfying diverse computing area demands. Prof. Jung's NVM projects are supported by the U.S. Department of Energy and are conducted in cooperation with the National Energy Research Scientific Computing Center.

CAMEL's SSD Paper in ZDNet

07.31.2014

One of our recent works has been discussed in ZDNet, which is one of the popular business technology news websites (by Robin Harris) -- "Making flash SSDs look like disks isn't easy. In fact, advanced high-performance SSDs use more power and run much hotter than disks. They aren't your father's thumb drive." This article introduces a part of our ongoing series of UT-Dallas research on high performance SSDs, and commends our work to SSD/NVM researchers. The article said "It’s important that we have good data on actual today’s SSD behavior instead of impressions gained years ago with simpler and slower devices. If high-performance SSDs loom large in your planning this paper (UT-Dallas) is well worth a read".

Based on requests from reviewers and researchers, we are preparing other works to reveal the thermal factors and power throttling issues for many different types of SSDs, and one of them is expected to appear in a major computer journal.

Read more: [ ZDNet ] [ Paper/Video ]

HIOS Debuts in ISCA 2014

06.21.2014

Our host interface I/O scheduling algorithm, HIOS, has been presented at the 41st International Symposium on Computer Architecture (ISCA), one of the top-tier conferences in the computer architecture community. This year, we shared with other researchers and engineers two significant challenges that most SSD systems face (garbage collection and resource conflicts), and proposed a novel scheduling method that redistributes the GC overheads across non-critical I/O requests and reduces channel resource contention.

Read more: [ IEEE paper ]

Two More Architecture/System Papers Got Accepted

06.20.2014

This week, CAMEL has published an invited journal paper titled "Exploring the Future of Out-Of-Core Computing with Compute-Local Non-Volatile Memory" in Scientific Programming. Our collaborative research paper titled "ZombieNAND: Resurrecting Dead NAND Flash for Improved SSD Longevity" has also been accepted to MASCOTS 2014. We are looking forward to meeting you in Paris in September 2014!

CAMEL's Four Architecture/System Papers at Top Venues

06.05.2014

We have four architecture papers being presented at major venues next week. The papers, listed below, demonstrate what we do on Non-Volatile Memory and GPUs at UT-Dallas.

- "Power, Energy and Thermal Considerations in SSD-Based I/O Acceleration," Jie Zhang, Mustafa Shihab, Myoungsoo Jung, USENIX HOTSTORAGE'14

- "HIOS: A Host Interface I/O Scheduler for Solid State Disks," Myoungsoo Jung, Wonil Choi, Shekhar Srikantaiah, Joonhyuk Yoo, Mahmut Kandemir, ISCA'14

- "GPUdrive: Reconsidering Storage Accesses for GPU Acceleration," Mustafa Shihab, Karl Taht, Myoungsoo Jung, ASBD at ISCA'14

- "Area, Power, and Latency Considerations of STT-MRAM to Substitute for Main Memory," Youngbin Jin, Mustafa Shihab, Myoungsoo Jung, MemoryForum ISCA'14

Read more: [ ISCA ] [ HotStorage ] [ ASBD ] [ MemoryForum ]

Mustafa and Karl Got Paper into ASBD@ISCA2014

05.01.2014

Mustafa and Karl's paper titled "GPUdrive: Reconsidering Storage Accesses for GPU Acceleration" has been accepted to ASBD (Architectures and Systems for Big Data) @ISCA'14!!

In this preliminary study, we analyze two critical performance bottlenecks in GPU-accelerated data processing and then study design considerations to reduce the overheads imposed by file-driven data movements in GPU computing.

Congratulations, Mustafa and Karl!!

Our STT-MRAM Study Has Been Accepted to MemoryForum@ISCA2014

04.27.2014

One of our recent studies, titled "Area, Power, and Latency Considerations of STT-MRAM to Substitute for Main Memory", has been accepted to Memory-Forum@ISCA'14!!

This work studies diverse device-level parameters of STT-MRAM in order to make its storage capacity comparable to DRAM, with better performance as well as better power consumption behavior. Carried out under Prof. Jung's supervision, it also presents analytic models to finely tune the thermal stability factor, which is related to STT-MRAM’s magnetic layer and corresponding transistor, and addresses the challenges that storage-class STT-MRAM faces in replacing DRAM as a working memory.

Jie Got Paper into HOTSTORAGE 2014

04.13.2014

Jie's paper analyzing operating temperature, dynamic power, and energy in state-of-the-art SSDs with high numbers of channels and chips has been accepted to HotStorage'14. This work also raises some important considerations regarding diverse types of modern flash-based SSDs that the storage community needs to pay attention to.

USENIX HotStorage is one of the top venues in the memory, storage and file system research area. Congratulations, Jie and Mustafa!! We are looking forward to discussing our findings with other architecture and storage researchers in Philadelphia this year!

Prof. Jung Joins Program Committee of IEEE ICCD 2014

04.01.2014

Prof. Jung has joined the computer systems and applications program committee of ICCD. ICCD’s multi-disciplinary emphasis provides an ideal environment for developers and researchers to discuss practical and theoretical work covering systems and applications, computer architecture, verification and test, design tools and methodologies, circuit design, and technology.

Prof. Jung and Wonil Got Paper into ISCA 2014

03.25.2014

Our paper describing a novel host interface I/O scheduler has been accepted to ISCA'14. The proposed scheduler, which is both GC-aware and QoS-aware, redistributes the GC overheads across non-critical I/O requests and reduces channel resource contention. We are excited to demonstrate our scheduler atop high-performance SSDs at ISCA, one of the top-tier conferences in the computer architecture community (ISCA, HPCA, ASPLOS, MICRO)!!

Triple-A Debuts in ASPLOS 2014

03.05.2014

Our paper proposing a Non-SSD based Autonomic All-Flash Array (Triple-A), a self-optimizing NAND flash cluster built from scratch, has just successfully debuted at ASPLOS'14. We are proud to have demonstrated one of our flash array works this year at ASPLOS, one of the top-tier conferences in the computer architecture community!!

Sprinkler Debuts in HPCA 2014

02.15.2014

Our novel device-level SSD controller (Sprinkler) has successfully debuted at HPCA, one of the top-tier conferences in the computer architecture community!! Sprinkler aims to maximize resource utilization and achieve high performance without additional NAND flash chips. Specifically, it relaxes parallelism dependency by scheduling I/O requests based on the internal resource layout rather than the order imposed by the device-level queue. In addition, Sprinkler improves flash-level parallelism and reduces the number of transactions by over-committing flash memory requests to specific resources.
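
The intuition behind scheduling by resource layout rather than queue order can be illustrated with a toy model. The sketch below is not Sprinkler's actual algorithm: it assumes each request is just the ID of the flash chip it targets, that every request occupies its chip for exactly one time slot, and the function names `fifo_slots` and `layout_aware_slots` are hypothetical. It only counts how many time slots each dispatch policy needs to drain the same queue.

```python
from collections import deque


def fifo_slots(requests):
    """In-order dispatch: within a time slot, issue requests from the head
    of the queue and stop at the first request whose chip is already busy."""
    queue = deque(requests)
    slots = 0
    while queue:
        busy = set()
        # Head-of-line blocking: a conflict at the head stalls everything behind it.
        while queue and queue[0] not in busy:
            busy.add(queue.popleft())
        slots += 1
    return slots


def layout_aware_slots(requests):
    """Out-of-order dispatch: within a time slot, scan the whole queue and
    issue the oldest pending request for every chip that is still idle."""
    pending = list(requests)
    slots = 0
    while pending:
        busy = set()
        remaining = []
        for chip in pending:
            if chip in busy:
                remaining.append(chip)  # chip taken this slot, retry next slot
            else:
                busy.add(chip)          # chip idle, issue the request now
        pending = remaining
        slots += 1
    return slots


if __name__ == "__main__":
    # Eight requests over four chips, queued so that neighbours collide.
    queue_order = [0, 0, 1, 1, 2, 2, 3, 3]
    print("in-order slots:     ", fifo_slots(queue_order))
    print("layout-aware slots: ", layout_aware_slots(queue_order))
```

In this toy setting the in-order policy leaves chips idle whenever two neighbouring requests target the same chip, while the layout-aware policy keeps every chip that has pending work busy in each slot.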

Prof. Jung Begins Undergraduate Research Mentoring at UT-Dallas

01.10.2014

CAMELab has just started mentoring undergraduate students in computer architecture and engineering research. The main goals of this undergraduate research mentoring program are i) to provide opportunities to explore diverse research topics in architecture, systems and operating systems, ii) to guide students in how to perform research, iii) to teach them how to use academic research tools such as Gem5, GPGPUsim and NANDFlashSim, and iv) to give them a chance to connect with graduate students and get more practice in performing research. As one example of the mentoring program, Prof. Jung and his students hold a research cleaning seminar for about 4 hours per week. Registered undergraduate students will explore GPU, SSD, multicore, NUMA, NUCA and emerging memory system research topics presented at the top-tier conferences in computer architecture (e.g., ISCA, ASPLOS, MICRO, HPCA), and will perform computer architecture research with Prof. Jung's help.

Our Out-Of-Core Computing with NVM Has Been Nominated for Best Paper at SC 2013

11.13.2013

Our paper describing a future NVM technology for accelerating scientific applications has been nominated for both Best Student Paper and Best Paper at SC'13 (Supercomputing'13)!!!

In this work we investigate co-location of NVM and compute by varying I/O interfaces, file systems, types of NVM, and both current and future SSD architectures, uncovering numerous bottlenecks implicit in these various levels in the I/O stack. We present novel hardware and software solutions, including the new Unified File System (UFS), to enable fuller utilization of the new compute-local NVM storage. Our experimental evaluation, which employs a real-world Out-of-Core (OoC) HPC application, demonstrates throughput increases in excess of an order of magnitude over current approaches.

CAMEL Settles Down in Dallas

08.01.2013

Prof. Jung and his student from Penn State (Wonil Choi, co-advised by Dr. Kandemir) have moved to UT-Dallas and begun to investigate reliable, robust, safe and intelligent computer and memory architectures, including emerging Non-Volatile Memory (NVM) technology. The aim is to find novel technologies that offer all of these properties for next-generation many-core processors, graphics processing units, persistent memory systems, embedded systems, high-performance computing and solid state disks.